Two senior members of the IEEE, J.H. Saltzer and M.D. Schroeder, wrote a paper titled “The Protection of Information in Computer Systems.” In this tutorial paper, Saltzer and Schroeder write in depth on architectural design and best practice principles for protecting information within information systems. Even though “The Protection of Information in Computer Systems” was published in 1975, this pioneering work is still surprisingly relevant and is often covered in university curriculums on computer science.

Saltzer and Schroeder published a set of eight architectural principles that embody secure systems design, including the following:

- Economy of Mechanism

- Fail-Safe Defaults

- Complete Mediation

- Open Design

- Separation of Privilege

- Least Privilege

- Least Common Mechanism

- Psychological Acceptability

Saltzer and Schroeder added two further design principles derived from an analysis of traditional physical security systems. By their own assessment in the same paper, these last two principles “apply only imperfectly to computer systems”:

- Work Factor

- Compromise Recording

Through many waves of innovation and leaps of technological advancement, many of these principles still hold as staples of computer security. The following sections explore each of them individually.

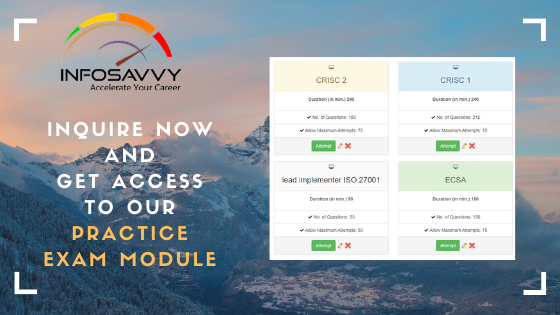


Economy of Mechanism

Complexity is the enemy of security. The simpler and smaller the system, the easier it is to design, assess, and test. When the system as a whole cannot be simplified sufficiently, consider partitioning the problem so that the components with the most significant risks are separated and simplified to the extent possible. This is the concept behind a security kernel: a small, separate subsystem containing the security-critical components that the rest of the system can rely upon.

Information security and cryptography expert Bruce Schneier stated (https://www.schneier.com/news/archives/2016/04/bruce_schneier_build.html):

“Complexity is the worst enemy of security. The more complex you make your system, the less secure it’s going to be, because you’ll have more vulnerabilities and make more mistakes somewhere in the system. … The simpler we can make systems, the more secure they are.”

By separating security functionality into small isolated components, the task of carefully reviewing and testing the code for security vulnerabilities can be significantly reduced.

Fail-Safe Defaults

Design security controls so that, in the absence of specific configuration settings to the contrary, the default is not to permit the action. Access should be based on permission (e.g., white-listing), not exclusion (e.g., black-listing). This is the principle behind “Deny All” default firewall rules.

The more general concept is fail-safe operation. Design systems so that if an error is detected, the system fails in a deny (or safe) state of higher security.
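
As a minimal sketch of default-deny behavior, the example below assumes a hypothetical in-memory allow-list (`ALLOW_RULES`) and a hypothetical `is_allowed` function; neither name comes from the original paper. Access is granted only when an explicit rule matches, and an error during the check is treated as a denial.

```python
# Hypothetical allow-list: only (user, action) pairs listed here are permitted.
ALLOW_RULES = {
    ("alice", "read_report"),
    ("bob", "read_report"),
    ("alice", "edit_report"),
}


def is_allowed(user, action, rules=ALLOW_RULES):
    """Return True only when an explicit allow rule matches.

    Anything not listed is denied, and any error during the lookup
    is also treated as a denial (fail closed).
    """
    try:
        return (user, action) in rules
    except Exception:
        # Fail safe: an unexpected error must never grant access.
        return False


print(is_allowed("alice", "edit_report"))  # explicit rule exists
print(is_allowed("bob", "edit_report"))    # no rule, so denied by default
print(is_allowed("bob", "read_report", rules=None))  # broken config, denied
```

The design choice worth noting is that there is no "deny" list at all: forgetting to add a rule results in less access, not more.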

Complete Mediation

The concept of complete mediation means that every access to every object is checked every time. This means your system must make it possible to authenticate the identity behind every request, and to verify its authorization, at every step. It matters little if you have a provably correct security kernel for authenticating requests if there are paths that can be exploited to bypass those checks.

It is important to be careful to avoid security compromises due to differences between the time the access authorization is checked and the time of the access itself. For example, in a situation in which access authorization is checked once and then cached, the owner of the object being accessed could change access permissions but the previously cached authorization would continue to be used, resulting in a security compromise.
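
The cached-authorization problem can be illustrated with a small sketch. The `acl` dictionary and the `can_read` function are hypothetical names chosen for this example only; the point is the difference between reusing an old decision and consulting the current permissions on each access.

```python
# Hypothetical access-control list: object name -> set of users allowed to read it.
acl = {"payroll.csv": {"alice", "bob"}}


def can_read(user, obj):
    """Consult the current ACL on every call (no cached decision)."""
    return user in acl.get(obj, set())


# A decision captured once and reused later, which is the risky pattern.
cached_decision = can_read("bob", "payroll.csv")

# The owner revokes bob's access after the cached check was made.
acl["payroll.csv"].discard("bob")

print(cached_decision)                 # stale: still says access is allowed
print(can_read("bob", "payroll.csv"))  # fresh check reflects the revocation
```

If caching is unavoidable for performance, one option is to invalidate cached decisions whenever the underlying permissions change.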

A more insidious attack is referred to as a time-of-check, time-of-use (TOCTOU) vulnerability. Consider the following pseudo-code on a system that permits symbolic links (i.e. user-created filenames that can refer to another file):

    if (check_file_permission(file_name, user) == OK) then
        perform_file_action(file_name);

The attacker creates a file they have permission to access, invokes a system function that contains the above code, and simultaneously runs a program to replace the file with a symbolic link of the same name that points to a protected file they do not have access to.

Should the system run the program between the time of the check_file_permission call and the perform_file_action call, the user will be able to compromise the protected file.

Preventing a TOCTOU attack can be challenging and depends upon support for atomic transactions from the underlying operating system or hardware.
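
One common mitigation on POSIX systems is to open the file first and then perform both the check and the action through the resulting file descriptor, so that the name cannot be swapped in between. The sketch below is illustrative only: `read_if_owned` is a hypothetical function, and its simple ownership test stands in for whatever permission check a real system would apply. It assumes a platform where `os.O_NOFOLLOW` is available (for example, Linux or macOS).

```python
import os
import stat


def read_if_owned(path, uid):
    """Open first, then check and use the same file descriptor.

    Because the ownership check (os.fstat) and the read both go through
    the descriptor, swapping the name for a symbolic link after the open
    cannot redirect the operation to a different file.
    """
    # O_NOFOLLOW makes the open fail if the final path component is a symlink.
    fd = os.open(path, os.O_RDONLY | os.O_NOFOLLOW)
    try:
        info = os.fstat(fd)  # describes the opened file, not the name
        if not stat.S_ISREG(info.st_mode) or info.st_uid != uid:
            raise PermissionError(f"{path}: not a regular file owned by uid {uid}")
        # closefd=False leaves closing to the finally clause below.
        with os.fdopen(fd, "rb", closefd=False) as handle:
            return handle.read()
    finally:
        os.close(fd)
```

Note that `O_NOFOLLOW` only guards the final path component; symbolic links in parent directories would need separate handling.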

If, for performance reasons, assumptions must be made (such as relying upon the authentication performed by another layer), these assumptions must be documented and carefully reviewed to ensure there is not a path that bypasses the earlier authentication step and presents an unauthenticated request to a service that erroneously assumes it has been validated.

In general, complete mediation means access rights are validated for every attempt to access a resource. If access is not revalidated on each attempt, a change in access rights, notably a more restrictive one, goes unobserved and unenforced, which can lead to unauthorized access.

Open Design

Do not rely solely upon security through obscurity. Security should depend upon keeping keys or passwords secret, not upon keeping the design of the system secret. This is not to say that your security architecture should be published: obscurity can add a layer of protection, but it ought not to be relied upon in and of itself. In the field of cryptography, this principle was first associated with the Dutch cryptographer Auguste Kerckhoffs. Kerckhoffs’s principle states that a cryptographic method ought to be secure even if everything about the system, except the key, is known to the attacker.

In the case of cryptography, the danger of security through obscurity (i.e. using a cryptographic algorithm that is not widely known) is that there is no way to prove a cipher secure. The only way we have confidence that, for example, the Advanced Encryption Standard (AES) algorithm is secure is that all of the details related to its operation and design have been published, enabling researchers around the world to try to find flaws that would enable it to be compromised. Since no significant flaws have been found after many years, we can say that AES is unlikely to be able to be broken using today’s technology (or, indeed, the technology anticipated over the next decade or two).

Open design also means taking care to document the assumptions upon which the security of the system relies. Often it is undocumented assumptions that are implicitly relied upon, and which later turn out not to be true, that cause grief. By surfacing and documenting these assumptions, one can take steps to ensure they remain true and can continue to be relied upon.

Separation of Privilege

Separation of privilege requires two (or more) actions, actors, or components to operate in a coordinated manner to perform a security-sensitive operation. This control, adopted from the financial accounting practice, has been a foundational protection against fraud for years. Breaking up a process into multiple steps performed by different individuals (segregation of duties), or requiring two individuals to perform a single operation together (dual control), forces the malicious insider to collude with others to compromise the system. Separation of privilege is more commonly called separation (or segregation) of duties.

Security controls that require the active participation of two individuals are more robust and less susceptible to failure than those that do not. While not every control is suitable for separation of privilege, nor does every risk mandate such security, the redundancy that comes from separation of privilege makes security less likely to be compromised by a single mistake (or rogue actor).

Separation of privilege can also be viewed as a defense-in-depth control: permission for sensitive operations should not depend on a single condition. The concept is more fully discussed in the “Key Management Practices” section.
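
Dual control can be sketched in a few lines. The function name `authorize_transfer`, its parameters, and the user names are hypothetical and serve only to illustrate the idea that a sensitive operation proceeds only when enough distinct people have approved it.

```python
def authorize_transfer(amount, approvers, required=2):
    """Allow a sensitive operation only with enough distinct approvers."""
    distinct = set(approvers)  # the same person approving twice counts once
    if len(distinct) < required:
        raise PermissionError(
            f"transfer of {amount} needs {required} distinct approvers, "
            f"got {len(distinct)}"
        )
    return True


print(authorize_transfer(1000, ["alice", "bob"]))  # two different people

try:
    authorize_transfer(1000, ["alice", "alice"])   # one person, twice
except PermissionError as err:
    print(err)
```

Counting distinct approvers rather than approvals is the important detail: without it, a single insider could satisfy the control alone.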

Least Privilege

Every process, service, or individual ought to operate with only those permissions absolutely necessary to perform the authorized activities and only for as long as necessary and no more. By limiting permissions, one limits the damage that can be done should a mistake, defect, or compromise cause an undesired action to be attempted. Granting permissions based on the principle of least privilege is the implementation of the concept of need-to-know, restricting access and knowledge to only those items necessary for the authorized task.

We see this in practice, for example, with the Linux sudo command, which temporarily elevates a user’s permissions to perform a privileged operation. This allows the user to perform a task requiring additional permissions only for the period of time necessary to perform the task. Properly configured, sudo lets authorized users act as a privileged user (other than root) to perform functions specific to certain services. For example, a user might sudo as “lp” to manage the printer daemon. The practice is most effective when it is restricted to privileged accounts tied to specific services and is not used to access the root account. The increased security comes from not allowing the user to operate for extended periods with unneeded permissions.
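
The same idea of time-limited elevation can be sketched at the application level. This is an analogy rather than a description of how sudo works: the `permissions` dictionary, the `temporarily_granted` helper, and the permission names are all hypothetical.

```python
from contextlib import contextmanager

# Hypothetical per-user permission sets.
permissions = {"carol": {"read_queue"}}


@contextmanager
def temporarily_granted(user, permission):
    """Grant one extra permission for the duration of a block, then revoke it."""
    already_had = permission in permissions.setdefault(user, set())
    permissions[user].add(permission)
    try:
        yield
    finally:
        # Revoke even if the block raised, unless the user held it beforehand.
        if not already_had:
            permissions[user].discard(permission)


with temporarily_granted("carol", "restart_printer"):
    print("restart_printer" in permissions["carol"])  # held inside the block

print("restart_printer" in permissions["carol"])  # revoked afterwards
```

Putting the revocation in a `finally` clause means the extra permission is withdrawn even when the privileged task fails partway through.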

Least Common Mechanism

Minimize the sharing of components between users, especially when the component must be used by all users. Such sharing can give rise to a single point of failure.

Another way of expressing this is to be wary of transitive trust. Transitive trust is the situation in which A trusts B, and B (possibly unknown to A) trusts C, so A ends up trusting C. For example, you conduct online financial transactions with your bank, which you trust. Your bank needs a website certificate in order to enable secure HTTPS connections, and so selects (trusts) a certificate authority (CA) to provide it. Your security now depends upon the security of that CA because, if the CA is compromised, your banking transactions could be compromised by a man-in-the-middle (MitM) attack (more on this in the “Public Key Infrastructure [PKI]” section). You trusted your bank, which trusted the CA, so you end up having to trust the CA without having any direct say in the matter.

Transitive trust is often unavoidable, but in such cases it ought to be identified and evaluated as a risk. Other design practices can then be applied to the circumstance to reduce the risk to acceptable levels (as discussed with open design earlier).

Of course, as with most Saltzer and Schroeder principles, the applicability of least common mechanism is not universal. In the case of access control, for example, it is preferable to have a single well-designed and thoroughly reviewed and tested library to validate access requests than for each subsystem to implement its own access control functions.

Psychological Acceptability

The best security control is of little value if users bypass it, work around it, or ignore it. If the security control’s purpose and method are obscure or poorly understood, it is more likely to be either ignored or misused. Security begins and ends with humans, and security controls designed without considering human factors are less likely to be successful.

The security architect has to walk the fine line between too few security controls and too many, such that some are ignored — or worse, actively defeated — by people just trying to get their jobs done.

This principle has also been called the principle of least astonishment: systems ought to operate in the manner that users expect. Commands and configuration options that behave in unexpected ways are more likely to be used incorrectly because some of their effects might not be anticipated by users.

Saltzer and Schroeder have suggested two other controls from the realm of physical security: work factor and compromise recording.

Work Factor

Work factor refers to the degree of effort required to compromise the security control. This means comparing the cost of defeating security with (a) the value of the asset being protected, and (b) the anticipated resources available to the attacker. This is most often considered in the context of encryption and brute-force attacks. For example, if an encryption key is only 16 bits long, the attacker has only 65,536 (about 6.6 x 10^4) different keys to try. A modern computer can try every possible key in a matter of seconds. If the key is 256 bits long, the attacker has about 1.2 x 10^77 keys to try. The same computer working on this problem will require more time than the universe has been in existence.
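
The arithmetic behind these figures can be checked with a short sketch. The guessing rate of one billion keys per second is an assumption chosen purely for illustration, and `brute_force_seconds` is a hypothetical helper.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
GUESSES_PER_SECOND = 1e9  # assumed attacker speed, for illustration only


def brute_force_seconds(key_bits, rate=GUESSES_PER_SECOND):
    """Worst-case time to try every key of the given length."""
    return 2 ** key_bits / rate


for bits in (16, 256):
    seconds = brute_force_seconds(bits)
    print(f"{bits}-bit key: {2 ** bits:.3e} keys, "
          f"{seconds:.3e} s ({seconds / SECONDS_PER_YEAR:.3e} years)")
```

At the assumed rate, the 16-bit key space is exhausted in a fraction of a second, while the 256-bit key space would take on the order of 10^60 years, far longer than the roughly 1.4 x 10^10 years the universe has existed.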

Compromise Recording

Sometimes it is better just to record that a security compromise has occurred than to expend the effort to prevent all possible attacks. In situations in which preventative controls are unlikely to be sufficient, consider deploying detective controls so that if security is breached (a) the damage might be able to be contained or limited by prompt incident response and (b) evidence of the perpetrator’s identity might be captured. The only thing more damaging than a security breach is an undetected security breach.

Closed circuit TV (CCTV) cameras are an example of a detective control when it comes to physical security. For network security, a network tap with packet capture would be comparable; they don’t stop breaches, but can provide invaluable information once a breach has been detected.

This is related to the concept of “assumption of breach.” Design your system not only to be secure, but on the assumption it can be breached, and consider mechanisms to ensure that breaches are quickly detected so that the damage can be minimized.
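
A detective control of this kind can be sketched as an audit log that records every access decision and flags repeated denials. The logger name, the `ALERT_THRESHOLD` value, and the `record_access` function are hypothetical choices made for this illustration.

```python
import logging
from collections import Counter

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
audit_log = logging.getLogger("audit")

ALERT_THRESHOLD = 3  # hypothetical number of denials that triggers an alert
denied_counts = Counter()


def record_access(user, resource, allowed):
    """Record every access decision; return True when an alert is warranted."""
    if allowed:
        audit_log.info("ALLOW user=%s resource=%s", user, resource)
        return False
    denied_counts[user] += 1
    audit_log.warning("DENY user=%s resource=%s", user, resource)
    return denied_counts[user] >= ALERT_THRESHOLD


# Three denied attempts by the same user reach the alert threshold.
for _ in range(3):
    alert = record_access("mallory", "/etc/shadow", allowed=False)
print(alert)
```

The log does nothing to stop the attempts; its value lies in leaving a record that incident responders can act on quickly.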
