ISO/IEC 19249

In 2017 the International Organization for Standardization (ISO) published its first revision of the standard ISO/IEC 19249, “Information technology — Security techniques — Catalogue of architectural and design principles for secure products, systems and applications.” The aim of ISO/IEC 19249 is to describe architectural and design principles that foster the secure development of systems and applications. ISO/IEC 19249 specifies five architectural principles and five design principles.

The five architectural principles from ISO/IEC 19249 are:

- Domain separation

- Layering

- Encapsulation

- Redundancy

- Virtualization

The five design principles from ISO/IEC 19249 are:

- Least privilege

- Attack surface minimization

- Centralized parameter validation

- Centralized general security services

- Preparing for error and exception handling

These architectural and design principles build on existing concepts and reflect a number of new approaches to security theory. The following sections examine each of these architectural and design principles.

ISO/IEC 19249 Architectural Principles

In the introductory text of ISO/IEC 19249’s architectural principles section, the technical specification describes the primary challenge that all information security professionals know well: finding the difficult balance between security and functionality. The specification proposes that the way to secure any system, project, or application is to first adopt its five architectural principles and then approach the easier challenge of finding the balance between functionality and security for each principle. ISO/IEC 19249’s architectural principles are examined in the following five sections.

Domain Separation

A domain is a concept that describes enclosing a group of components together as a common entity. As a common entity, these components, be they resources, data, or applications, can be assigned a common set of security attributes. The principle of domain separation involves:

- Placing components that share similar security attributes, such as privileges and access rights, in a domain. That domain can then be assigned the necessary controls deemed pertinent to its components.

- Only permitting separate domains to communicate over well-defined and (completely) mediated communication channels (e.g., APIs).

In networking, the principle of domain separation can be implemented through network segmentation: putting devices that share similar access privileges on the same distinct network, connected to other network segments through a firewall or other device that mediates access between segments (domains).
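The mediation step can be sketched as a default-deny check on inter-segment flows. This is a minimal illustration only, assuming hypothetical segment names, address ranges, and an allowlist of (source segment, destination segment, port) tuples; a real deployment would express the same policy in firewall rules. Traffic that stays inside one segment is deliberately not mediated by this particular check.

```python
import ipaddress
from typing import Optional

# Hypothetical segments (domains); the names and ranges are illustrative.
SEGMENTS = {
    "user_lan": ipaddress.ip_network("10.10.0.0/16"),
    "servers": ipaddress.ip_network("10.20.0.0/16"),
    "finance": ipaddress.ip_network("10.30.0.0/24"),
}

# Permitted inter-segment flows: (source segment, destination segment, port).
# Anything not listed here is denied.
ALLOWED_FLOWS = {
    ("user_lan", "servers", 443),
    ("servers", "finance", 5432),
}


def segment_of(address: str) -> Optional[str]:
    """Return the name of the segment containing the address, if any."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_flow_permitted(src: str, dst: str, port: int) -> bool:
    """Mediate a connection between segments using a default-deny policy."""
    src_seg, dst_seg = segment_of(src), segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False  # hosts outside every known segment are never trusted
    if src_seg == dst_seg:
        return True  # intra-domain traffic is not mediated by this check
    return (src_seg, dst_seg, port) in ALLOWED_FLOWS
```

With this policy a workstation can reach the web servers on port 443, but it cannot talk to the finance segment directly; only the server segment can, and only on the database port.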

Of particular concern are systems in which privileges are not static, that is, situations in which components of a single domain, or entire domains, have privileges and access permissions that can change dynamically. These designs need to be treated particularly carefully to ensure that the appropriate mediations occur and that time-of-check to time-of-use (TOCTOU) vulnerabilities are avoided: a permission verified at one moment may no longer hold by the time the resource is actually used.
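A familiar TOCTOU case is a program that first checks whether a file exists and then creates it in a separate step; another process can create the file, or plant a symlink, in the gap. The sketch below, assuming a POSIX-like system, contrasts that racy pattern with one that lets the kernel perform the check and the creation as a single atomic operation using `O_CREAT | O_EXCL`. The function names are hypothetical.

```python
import os


def write_new_file_racy(path: str, data: bytes) -> None:
    # VULNERABLE, shown only for contrast: the check and the use are two
    # separate steps, so the state of `path` can change between them.
    if not os.path.exists(path):
        with open(path, "wb") as f:
            f.write(data)


def write_new_file_atomic(path: str, data: bytes) -> bool:
    """Create `path` only if it does not already exist, in one atomic step.

    With O_CREAT | O_EXCL the kernel checks for existence and creates the
    file together, and on POSIX systems the call also fails if `path` is a
    symlink. Returns False if the file already existed.
    """
    try:
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    except FileExistsError:
        return False
    with os.fdopen(fd, "wb") as f:
        f.write(data)
    return True
```

The same idea applies beyond files: where possible, make the authorization decision and the operation it protects a single mediated step rather than two.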

Examples where domain separation is used in the real world include the following:

- A network is separated into manageable and logical segments. Network traffic (inter-domain communication) is handled according to policy and routing control, based on the trust level and work flow between segments.

- Data is separated into domains in the context of classification level. Even though data might come from disparate sources, if that data is classified at the same level, the handling and security of that classification level (domain) is accomplished with like security attributes.


Layering

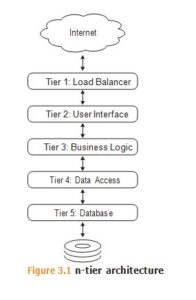

Layering is the hierarchical structuring of a system into different levels of abstraction, with higher levels relying upon services and functions provided by lower levels, and lower levels hiding (or abstracting) details of the underlying implementation from higher levels.

Layering is seen in network protocols, starting with the classic OSI seven-layer model running from physical through to application layers.

In software systems one encounters operating system calls, upon which libraries are built, upon which we build our programs. Within the operating system, higher level functions (such as filesystem functions) are built upon lower level functions (such as block disk I/O functions).

In web applications we see this principle in the n-tier architecture illustrated in Figure 3.1.

The purpose of layering is to:

- Create the ability to impose specific security policies at each layer

- Simplify functionality so that the correctness of its operation is more easily validated

From a security perspective:

- Higher levels always have the same or less privilege than a lower level

- If layering is used to provide security controls, it must not be possible for a higher level to bypass an intermediate level. For example, if a program is able to bypass the filesystem layer and issue direct block-level I/O requests to the underlying disk storage device, then the security policies (i.e., file permissions) enforced by the filesystem layer will be for naught.
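The bypass problem can be made concrete with a toy two-layer design. In this minimal sketch (the class names are hypothetical, and block bounds and size checks are omitted), the lower `BlockDevice` layer knows nothing about owners, while the higher `FileSystem` layer enforces a simple owner-only read policy on top of it.

```python
from typing import Dict, Tuple


class BlockDevice:
    """Lowest layer: raw blocks, with no notion of files or owners."""

    def __init__(self, num_blocks: int, block_size: int = 16) -> None:
        self._blocks = [bytes(block_size) for _ in range(num_blocks)]

    def read_block(self, n: int) -> bytes:
        return self._blocks[n]

    def write_block(self, n: int, data: bytes) -> None:
        self._blocks[n] = data


class FileSystem:
    """Higher layer: maps names to blocks and enforces owner-only reads."""

    def __init__(self, device: BlockDevice) -> None:
        self._device = device
        self._files: Dict[str, Tuple[str, int]] = {}  # name -> (owner, block)
        self._next_block = 0

    def create(self, user: str, name: str, data: bytes) -> None:
        block = self._next_block
        self._next_block += 1
        self._device.write_block(block, data)
        self._files[name] = (user, block)

    def read(self, user: str, name: str) -> bytes:
        owner, block = self._files[name]
        if user != owner:
            raise PermissionError(f"{user} may not read {name}")
        return self._device.read_block(block)
```

After `fs.create("alice", "notes", b"secret")`, a call to `fs.read("bob", "notes")` raises `PermissionError`, yet any code holding the `BlockDevice` object can simply call `read_block(0)` and get the data. That is exactly why the lower layer must not be reachable by callers that are supposed to go through the higher one.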

Layering and domain separation are related techniques and work well together. A single domain might have multiple layers to assist in structuring functions and validating correctness. Alternatively, different layers might be implemented as different domains. Or a combination of the above might apply.

An example where layering is used in the real world is a filesystem. The lowest layer, access to the raw disk, provides only basic protection to the disk sectors. The next layer might be the virtual or logical partitioning of the disk; its security assurance would take the form of controlling access to those disk partitions, or of certain data integrity or high availability functions. Higher still are the filesystems through which users browse their data, which employ advanced access control features.

Encapsulation

Encapsulation is an architectural concept in which objects are accessed only through functions that are logically separate from the objects themselves; the underlying object is abstracted away by inclusion in, or information hiding within, higher-level objects. The functions might be specific to accessing or changing attributes of that object. The encapsulation functions can define the security policy for that object and mediate all operations on it. As a whole, those functions act as a kind of agent for the object.

Proper encapsulation requires that all access or manipulation of the encapsulated object must go through the encapsulation functions, and that it is not possible to tamper with the encapsulation of the object or the security attributes (e.g., permissions) of the encapsulation functions.
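As a small illustration, the sketch below hides an account balance inside a closure so that it can only be read or changed through the returned functions, which apply a hypothetical policy (only the owner may withdraw; deposits must be positive; the balance may not go negative). This is a language-level sketch under stated assumptions: Python code can still reach closure contents through introspection, so it shows the structure of encapsulation rather than the tamper resistance the principle requires, which in practice comes from the operating system or hardware.

```python
from typing import Callable, Dict


def make_account(owner: str, balance: int = 0) -> Dict[str, Callable]:
    """Encapsulate a balance behind functions that mediate every operation."""
    state = {"balance": balance}  # reachable only through the functions below

    def get_balance() -> int:
        return state["balance"]

    def deposit(amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        state["balance"] += amount

    def withdraw(user: str, amount: int) -> None:
        # The encapsulation functions carry the object's security policy.
        if user != owner:
            raise PermissionError("only the owner may withdraw")
        if amount <= 0 or amount > state["balance"]:
            raise ValueError("invalid withdrawal amount")
        state["balance"] -= amount

    return {"get_balance": get_balance, "deposit": deposit, "withdraw": withdraw}
```

Because every change passes through `deposit` or `withdraw`, the policy is checked in one place and cannot be skipped by ordinary callers.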

Device drivers can be considered to use a form of encapsulation in which a simpler and consistent interface is provided that hides the details of a particular device, as well as the differences between similar devices. Forcing interactions to occur through the abstract object increases the assurance that information flows conform to the expected inputs and outputs.

An example where encapsulation is used in the real world is the use of the setuid bit. Typically, in Linux or any Unix-like operating system, a file is owned by the user who created it, and a program normally runs with the privileges of the user who launched it.

But a special mechanism, the setuid bit, allows a program to run with different privileges. When the setuid bit is set on an executable file, the program runs with the privileges of the file's owner rather than those of the user who launched it. The setuid program thus controls access above and beyond the typical operation, acting as the agent through which users reach the privileged resource. That is an example of encapsulation.
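The setuid bit is simply a flag in a file's mode, so it can be inspected with Python's standard `os` and `stat` modules. The sketch below reports whether a given executable would run as its owner; the helper names are hypothetical.

```python
import os
import stat


def has_setuid(mode: int) -> bool:
    """True if the setuid bit is set in an st_mode value."""
    return bool(mode & stat.S_ISUID)


def describe(path: str) -> str:
    """Report whether the program at `path` would run as its owner."""
    st = os.stat(path)
    perms = stat.filemode(st.st_mode)  # a setuid file shows 's', e.g. -rwsr-xr-x
    if has_setuid(st.st_mode):
        return f"{path} {perms}: runs with the privileges of owner uid {st.st_uid}"
    return f"{path} {perms}: runs as the invoking user"
```

On many Linux distributions, `describe("/usr/bin/passwd")` shows a setuid program owned by root: ordinary users run it to change their own password, and the program mediates their access to the protected password database.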

Redundancy

Redundancy is designing a system with replicated components so that the system can continue to operate in spite of errors or excessive load. From a security perspective, redundancy is an architectural principle for addressing possible availability compromises.

In the case of replicated data stores, the particular challenge is to ensure consistency.

State changes to one data store must be reliably replicated across all redundant data stores, or the purpose of redundancy is defeated and potential security vulnerabilities created.

For redundancy to work, it must be possible for the overall system to detect errors in one of the replicated subsystems. Once an error is detected, it may be corrected, or it may trigger a failover to the redundant subsystem. How any particular error is handled depends upon the capabilities of the overall system. In some cases it is sufficient merely to reject the operation and wait for the requester to reissue it (this time on one of the remaining working redundant systems). In other cases, it is necessary to reverse (roll back) intermediate state changes so that when the request is reattempted on a correctly functioning system, the overall system state is consistent.

An example of the first is a website behind a load balancer. If one of the web servers fails and cannot process a request for a web page, it may be sufficient to fail the request and wait for the user to reload the page, at which point the load balancer can route it to a working server.
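The "reject and retry elsewhere" strategy can be sketched as a loop over redundant backends in which an exception counts as a detected error. This is a minimal sketch assuming each backend is a hypothetical callable that either returns a page or raises; it is only appropriate for idempotent requests such as page fetches, where a failed attempt leaves no partial state behind.

```python
from typing import Callable, List


class AllBackendsFailed(Exception):
    """Raised when no redundant backend could serve the request."""


def serve_with_failover(request: str, backends: List[Callable[[str], str]]) -> str:
    """Try each redundant backend in turn until one succeeds."""
    errors = []
    for backend in backends:
        try:
            return backend(request)
        except Exception as exc:  # error detected in one replica: fail over
            errors.append(exc)
    raise AllBackendsFailed(f"{len(errors)} backends failed for {request!r}")
```

If the first backend raises, the request is simply reissued to the next one; only when every replica fails does the error reach the requester.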

In a situation in which the request involves, for example, transferring funds from an account at one bank to another, if the funds have been deducted from the first account before the failure occurs, it is necessary to ensure that the deduction is reversed before failing and retrying the request.
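The funds-transfer case needs the second strategy: intermediate changes must be rolled back before the request is retried. A database transaction provides exactly that. The sketch below uses Python's built-in `sqlite3` module, whose connection context manager commits on success and rolls back if an exception escapes; the `accounts` table and the `fail_midway` switch (used only to simulate a crash) are hypothetical.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int,
             fail_midway: bool = False) -> None:
    """Move funds atomically: both updates commit, or neither does."""
    with conn:  # commit on success, roll back on any exception
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?",
                     (amount, src))
        if fail_midway:
            # Simulated failure after the debit; the rollback undoes it.
            raise RuntimeError("simulated failure after debit")
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?",
                     (amount, dst))
```

When the simulated failure fires, the debit is reversed, so the balances are exactly as they were and the request can safely be retried on a working system.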

Examples where redundancy is used in the real world include the following:

- High availability solutions such as a cluster, where one component or system takes over when its active partner becomes inaccessible

- Having storage in RAID configurations where the data is made redundant and fault tolerant

Virtualization

Virtualization is a form of emulation in which the functionality of one real or simulated device is emulated on a different one. (This is discussed in more detail later in the “Understand Security Capabilities of Information Systems” section.)

More commonly, virtualization is the provision of an environment that functions like a single dedicated computer environment but supports multiple such environments on the same physical hardware. The emulation can operate at the hardware level, in which case we speak of virtual machines, or the operating system level, in which case we speak of containers.

Virtualization is used extensively in the real world to make the most cost-effective use of resources and to scale up or down as business needs require.

ISO/IEC 19249 Design Principles

These design principles are meant to help identify and mitigate risk. Some of these five fundamental ideas can be directly associated with security properties of the target system or project, while others are generally applied.

ISO/IEC 19249 opens its discussion of the design principles by crediting the Saltzer and Schroeder paper mentioned earlier in the chapter. The technical specification follows that citation with the plain fact that, since 1974, “IT products and systems have significantly grown in complexity.” Readers will understand that securing functionality requires comparable thought toward architecture and design. Following are the five ISO/IEC 19249 design principles.

Least Privilege

Perhaps the best-known of ISO/IEC 19249’s design principles, least privilege is the idea of keeping the privileges of an application, user, or process to the minimum level necessary to perform the task.

The purpose of this principle is to minimize damage, whether by accident or malicious act. Users should not feel slighted by having their privileges reduced, since their liability is also reduced in cases where their own access is used without their authorization. Implementing the principle is not a reflection of distrust, but a safeguard against abuse.

Examples where least privilege is used in the real world include the following:

- A web server has only the privileges permitting access to necessary data.

- Applications do not run at highly privileged levels unless necessary.

- The marketing department of an organization has no access to the finance department’s servers.
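The examples above share one pattern: each role is granted only what its task needs, and everything else is refused by default. A minimal sketch, assuming a hypothetical role-to-permission map (the role and permission names are illustrative, not from any particular product):

```python
from typing import Dict, FrozenSet

# Hypothetical grants: each role gets only the permissions its job requires.
ROLE_GRANTS: Dict[str, FrozenSet[str]] = {
    "marketing": frozenset({"marketing-share:read", "marketing-share:write"}),
    "finance": frozenset({"finance-server:read", "finance-server:write"}),
    "web-server": frozenset({"catalog-db:read"}),  # read-only: all it needs
}


def is_allowed(role: str, permission: str) -> bool:
    """Default deny: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_GRANTS.get(role, frozenset())
```

Here marketing cannot read the finance server, and the web server can read its catalog database but not write to it, so a compromise of either has a smaller blast radius.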

Attack Surface Minimization

A system’s attack surface is its services and interfaces that are accessible externally (to the system). Reducing the number of ways the system can be accessed can include disabling or blocking unneeded services and ports, using IP whitelisting to limit access to internal API calls that need not be publicly accessible, and so on.

System hardening, the disabling and/or removal of unneeded services and components, is a form of attack surface minimization. This can involve blocking networking ports, removing system daemons, and otherwise ensuring that the only services and programs that are available are the minimum set necessary for the system to function as required.

Reducing the number of unnecessary open ports and running applications is an obvious approach. But another, less frequently observed strategy for minimizing the attack surface is to reduce the complexity of necessary services. If a service or function of a system is required, perhaps the workflow or operation of that service can be “minimized” by simplifying it.

Examples where attack surface minimization is used in the real world include the following:

- Turning off unnecessary services

- Closing unneeded ports

- Filtering or screening traffic to only the required ports

- Reducing interface access to only administrator/privileged users
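A simple way to check attack surface minimization in practice is to compare the ports that actually accept connections against an allowlist of ports the system is supposed to expose. The sketch below does a plain TCP connect check from the local machine using the standard `socket` module; the function names are hypothetical, and ports filtered by a firewall between the scanner and the host will simply appear closed.

```python
import socket
from typing import Iterable, List, Set


def open_ports(host: str, ports: Iterable[int], timeout: float = 0.2) -> List[int]:
    """Return the ports on `host` that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            if s.connect_ex((host, port)) == 0:  # 0 means the connect succeeded
                found.append(port)
    return found


def unexpected_ports(host: str, ports: Iterable[int], allowed: Set[int]) -> List[int]:
    """Ports that are open but not on the allowlist: candidates to close."""
    return [p for p in open_ports(host, ports) if p not in allowed]
```

For example, `unexpected_ports("127.0.0.1", range(1, 1025), allowed={22, 443})` lists any low-numbered service that is listening locally but was never meant to be exposed.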

Centralized Parameter Validation

As discussed later in this chapter in the section on common system vulnerabilities, many threats involve systems accepting improper inputs. Since ensuring that parameters are valid is common across all components that process similar types of parameters, using a single library to validate those parameters makes it possible to review and test that validation logic thoroughly in one place, rather than in every component.

Full parameter validation is especially important when dealing with user input, or input from systems into which users enter data. Invalid or malformed data can be fed to the system, either unwittingly by inept users or deliberately by malicious attackers.
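Centralized validation can be as simple as one shared module of validators that every handler calls instead of writing its own checks. In the sketch below the specific rules (a lowercase username pattern, a quantity between 1 and 1000) and the handler names are hypothetical; the point is that both handlers reuse the same reviewed and tested code.

```python
import re

# Shared validation module: the rules below are illustrative assumptions.
_USERNAME_RE = re.compile(r"[a-z][a-z0-9_]{2,31}")


def validate_username(value) -> str:
    if not isinstance(value, str) or not _USERNAME_RE.fullmatch(value):
        raise ValueError("invalid username")
    return value


def validate_quantity(value, maximum: int = 1000) -> int:
    try:
        qty = int(value)
    except (TypeError, ValueError):
        raise ValueError("quantity must be an integer") from None
    if not 1 <= qty <= maximum:
        raise ValueError(f"quantity must be between 1 and {maximum}")
    return qty


# Two different components reuse the same validators.
def handle_order(params: dict) -> tuple:
    return validate_username(params.get("user")), validate_quantity(params.get("qty"))


def handle_profile(params: dict) -> str:
    return validate_username(params.get("user"))
```

Missing, malformed, or out-of-range input is rejected with the same `ValueError` everywhere, so a fix to a validation rule applies to every component at once.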

Examples where centralized parameter validation is used in the real world include the following:

- Validating input data by secure coding practices

- Screening data through an application firewall

Centralized General Security Services

The principle of centralizing security services can be implemented at several levels. At the operating system level, your access control, user authentication and authorization, logging, and key management are all examples of discrete security services that can and should be managed centrally. Simplifying your security services interface instead of managing multiple interfaces is a sensible benefit.

Implementing the principle at an operational or data flow level, one example is having a server dedicated for key management and processing of cryptographic data. The insecure scenario is one system sharing both front-end and cryptographic processing; if the front-end component were compromised, that would greatly raise the vulnerability of the cryptographic material.

The centralized general security services principle is a generalization of the previously discussed centralized parameter validation principle: by implementing commonly used security functions once, it is easier to ensure that the security controls have been properly reviewed and tested. It is also more cost-effective to concentrate one’s efforts on validating the correct operation of a few centralized services rather than on myriad implementations of what is essentially the same control.
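The key-management example can be sketched in miniature as a single service that holds the key and performs all message-authentication work, so that front-end components only ever hold a reference to the service, never the key itself. This in-process class is a hypothetical stand-in for the dedicated server (or hardware security module) described above; it uses the standard `hmac`, `hashlib`, and `secrets` modules.

```python
import hashlib
import hmac
import secrets


class KeyService:
    """Hypothetical central service: the key is generated and used only here."""

    def __init__(self) -> None:
        self.__key = secrets.token_bytes(32)  # never handed to callers

    def sign(self, message: bytes) -> str:
        """Return an HMAC-SHA256 tag for the message, as hex."""
        return hmac.new(self.__key, message, hashlib.sha256).hexdigest()

    def verify(self, message: bytes, tag: str) -> bool:
        # compare_digest avoids leaking information through timing differences.
        return hmac.compare_digest(self.sign(message), tag)
```

Because signing and verification are implemented once, there is a single place to review the cryptographic code, rotate the key, or move the service onto separate hardware.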

Examples where centralized security services are used in the real world include the following:

- Centralized access control server

- Centralized cryptographic processing

- Security information and event management

Preparing for Error and Exception Handling

Errors happen. Systems must ensure that errors are detected and appropriate action taken, whether that is to just log the error or to take some action to mitigate the impact of the issue. Errors ought not to leak information, for example, by displaying stack traces or internal information in error reports that might disclose confidential information or provide information useful to an attacker. Systems must be designed to fail safe (as discussed earlier) and to always remain in a secure state, even when errors occur.

Errors can also be indicators of compromise, and detecting and reporting such errors can enable a quick response that limits the scope of the breach.

An example of where error and exception handling is used in the real world is developing applications to properly handle errors and respond with a corresponding action.
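One common pattern that follows these guidelines is to log full error details internally while returning only a generic message to the caller. The sketch below assumes a hypothetical request handler that wraps some processing function: on failure it returns an error response rather than partial results (failing safe), writes the stack trace to an internal log tagged with a random incident ID, and shows the user only that ID.

```python
import logging
import uuid
from typing import Any, Callable, Dict

log = logging.getLogger("app.security")


def handle_request(process: Callable[[Any], Any], request: Any) -> Dict[str, Any]:
    """Run process(request); on failure, fail safe and reveal nothing internal."""
    try:
        return {"status": 200, "body": process(request)}
    except PermissionError:
        # Denials are worth logging: repeated ones can indicate an attack.
        log.warning("access denied for request %r", request)
        return {"status": 403, "body": "Access denied."}
    except Exception:
        incident = uuid.uuid4().hex
        log.exception("unhandled error, incident %s", incident)  # includes trace
        return {"status": 500, "body": f"Internal error. Reference: {incident}"}
```

The incident ID lets support staff find the detailed log entry, and the logged denials and failures feed the monitoring that can reveal an indicator of compromise, while the attacker learns nothing about the internals.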
