Amazon Simple Storage Service (S3) is where individuals, applications, and a long list of AWS services keep their data.

It’s an excellent platform for the following:

– Maintaining backup archives, log files, and disaster recovery images

– Running analytics on big data at rest

– Hosting static websites

S3 provides inexpensive and reliable storage that can, if necessary, be closely integrated with operations running within or external to Amazon Web Services.

This isn’t the same as the operating system volumes you learned about in the previous chapter: those are kept on the block storage volumes driving your EC2 instances. S3, by contrast, provides a space for effectively unlimited object storage.

What’s the difference between object and block storage? With block-level storage, data on a raw physical storage device is divided into individual blocks whose use is managed by a file system. NTFS is a common file system used by Windows, while Linux might use Btrfs or ext4. The file system, on behalf of the installed OS, is responsible for allocating space for the files and data that are saved to the underlying device and for providing access whenever the OS needs to read some data.

An object storage system like S3, on the other hand, provides what you can think of as a flat surface on which to store your data. This simple design avoids some of the OS-related complications of block storage and allows anyone easy access to any amount of professionally designed and maintained storage capacity.

When you write files to S3, they’re stored along with up to 2 KB of metadata. The metadata is made up of keys that establish system details like data permissions and the appearance of a file system location within a bucket.
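To make the "flat surface" idea concrete, here is a minimal in-memory sketch in Python. It is a toy model, not how S3 is implemented: the names `FlatBucket` and `StoredObject` are hypothetical, and the way the 2 KB metadata limit is counted (UTF-8 bytes of all names and values) is an assumption made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

MAX_METADATA_BYTES = 2 * 1024  # the 2 KB metadata ceiling mentioned above


@dataclass
class StoredObject:
    """An object is just its bytes plus a small bag of metadata."""
    data: bytes
    metadata: dict = field(default_factory=dict)


class FlatBucket:
    """Toy stand-in for a bucket: one flat key-to-object mapping, no directories."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, key: str, data: bytes, metadata: dict | None = None) -> None:
        metadata = metadata or {}
        # Assumption: size is the total UTF-8 length of all names and values.
        size = sum(len(k.encode()) + len(v.encode()) for k, v in metadata.items())
        if size > MAX_METADATA_BYTES:
            raise ValueError(f"metadata is {size} bytes; limit is {MAX_METADATA_BYTES}")
        self._objects[key] = StoredObject(data, dict(metadata))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def keys(self) -> list:
        return sorted(self._objects)
```

Note that a key such as `contracts/acme.pdf` is stored as a single string. Nothing in the mapping knows about a `contracts` directory; the slash is just another character in the key.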

Through the rest of this chapter, you’re going to learn the following:

– How S3 objects are saved, managed, and accessed

– How to choose from among the various classes of storage to get the right balance of durability, availability, and cost

– How to manage long-term data storage lifecycles by incorporating Amazon Glacier into your design

– What other Amazon web services exist to help you with your data storage and access operations

S3 Service Architecture

You organize your S3 files into buckets. By default, you’re allowed to create as many as 100 buckets for each of your AWS accounts. As with other AWS services, you can ask AWS to raise that limit. Although an S3 bucket and its contents exist within only a single AWS region, the name you choose for your bucket must be globally unique within the entire S3 system. There’s some logic to this: you’ll often want your data located in a particular geographical region to satisfy operational or regulatory needs. But at the same time, being able to reference a bucket without having to specify its region simplifies the process.

Prefixes and Delimiters

As you’ve seen, S3 stores objects within a bucket on a flat surface without subfolder hierarchies. However, you can use prefixes and delimiters to give your buckets the appearance of a more structured organization.

A prefix is a common text string that indicates an organization level. For example, the word contracts when followed by the delimiter / would tell S3 to treat a file with a name like contracts/acme.pdf as an object that should be grouped together with a second file named contracts/dynamic.pdf.

S3 recognizes folder/directory structures as they’re uploaded and emulates their hierarchical design within the bucket, automatically converting slashes to delimiters. That’s why you’ll see the correct folders whenever you view your S3-based objects through the console or the API.
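The grouping behavior described above can be sketched in a few lines of Python. This is a local simulation over a list of key names, not a call to S3; the function name `list_objects` and the `readme.txt` key are made up for the example. It returns the keys that sit directly under a prefix, plus the "folders" (common prefixes) that a delimiter rolls the remaining keys into.

```python
def list_objects(keys, prefix="", delimiter="/"):
    """Group flat keys the way a prefix/delimiter listing presents them.

    Returns (contents, common_prefixes):
      contents        - keys directly under `prefix`
      common_prefixes - rolled-up "folders" one level below `prefix`
    """
    contents = []
    common_prefixes = []
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        # Look for the delimiter only in the part of the key after the prefix.
        cut = rest.find(delimiter) if delimiter else -1
        if cut == -1:
            contents.append(key)
        else:
            group = prefix + rest[: cut + len(delimiter)]
            if group not in common_prefixes:
                common_prefixes.append(group)
    return contents, common_prefixes


keys = ["contracts/acme.pdf", "contracts/dynamic.pdf", "readme.txt"]
top_level = list_objects(keys)
inside_contracts = list_objects(keys, prefix="contracts/")
```

At the top level the two contract files collapse into a single `contracts/` entry, which is what makes the console show a folder. Listing with the prefix `contracts/` then returns the two files themselves.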

Working with Large Objects

While there’s no theoretical limit to the total amount of data you can store within a bucket, a single object may be no larger than 5 TB. Individual uploads can be no larger than 5 GB. To reduce the risk of data loss or aborted uploads, AWS recommends that you use a feature called Multipart Upload for any object larger than 100 MB.

As the name suggests, Multipart Upload breaks a large object into multiple smaller parts and transmits them individually to their S3 target. If one transmission should fail, it can be repeated without impacting the others. Multipart Upload will be used automatically when the upload is initiated by the AWS CLI or a high-level API, but you’ll need to manually break up your object if you’re working with a low-level API.

An application programming interface (API) is a programmatic interface through which operations can be run through code or from the command line. AWS maintains APIs as the primary method of administration for each of its services. AWS provides low-level APIs for cases when your S3 uploads require hands-on customization, and it provides high-level APIs for operations that can be more readily automated.

This page contains specifics: https://docs.aws.amazon.com/AmazonS3/latest/dev/uploadobjusingmpu.html
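If you do have to break up an object yourself, the arithmetic might look like the following sketch. It only plans the parts (number, byte offset, length) and uploads nothing. The function name `plan_upload` is hypothetical, the sizes from the text are treated as binary units, and the 5 MiB minimum part size and 10,000-part ceiling are limits taken from the AWS documentation rather than from this article.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MAX_OBJECT_SIZE = 5 * TIB        # largest single object
MAX_PART_SIZE = 5 * GIB          # largest individual upload
MULTIPART_THRESHOLD = 100 * MIB  # AWS-recommended switch-over point
MIN_PART_SIZE = 5 * MIB          # assumption: documented minimum for non-final parts
MAX_PARTS = 10_000               # assumption: documented part-count ceiling


def plan_upload(object_size, part_size=MULTIPART_THRESHOLD):
    """Return a list of (part_number, offset, length) tuples for an upload."""
    if not 0 <= object_size <= MAX_OBJECT_SIZE:
        raise ValueError("object size must be between 0 and 5 TB")
    if object_size <= MULTIPART_THRESHOLD:
        return [(1, 0, object_size)]  # small enough for a single upload
    if not MIN_PART_SIZE <= part_size <= MAX_PART_SIZE:
        raise ValueError("part size out of range")
    part_count = -(-object_size // part_size)  # ceiling division
    if part_count > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part size")
    parts = []
    for number in range(1, part_count + 1):
        offset = (number - 1) * part_size
        length = min(part_size, object_size - offset)  # final part may be shorter
        parts.append((number, offset, length))
    return parts


parts = plan_upload(250 * MIB)  # two 100 MiB parts plus one 50 MiB part
```

Because each part carries its own offset and length, a failed part can be re-sent on its own, which is the property the text describes.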

Encryption

Unless it’s intended to be publicly available (perhaps as part of a website), data stored on S3 should always be encrypted. You can use encryption keys to protect your data while it’s at rest within S3 and, by using only Amazon’s encrypted API endpoints for data transfers, protect data during its journeys between S3 and other locations. Data at rest can be protected using either server-side or client-side encryption.

Server-Side Encryption

The “server” in server-side is the S3 platform: AWS encrypts your data objects as they’re saved to disk and decrypts them when you send properly authenticated requests for retrieval. You can use one of three encryption options:

– Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3), where AWS uses its own enterprise-standard keys to manage every step of the encryption and decryption process

– Server-Side Encryption with AWS KMS-Managed Keys (SSE-KMS), where, beyond the SSE-S3 features, the use of an envelope key is added along with a full audit trail for tracking key usage. You can optionally import your own keys through the AWS KMS service.

– Server-Side Encryption with Customer-Provided Keys (SSE-C), which lets you provide your own keys for S3 to apply to its encryption
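As a rough illustration of how the three options differ in practice, the sketch below builds the extra keyword arguments you might pass to boto3’s `put_object` call for each one. The helper name `sse_put_args` and the mode labels are hypothetical; the parameter names (`ServerSideEncryption`, `SSEKMSKeyId`, `SSECustomerAlgorithm`, `SSECustomerKey`) are the ones boto3 uses. The 32-byte check assumes an AES-256 customer key.

```python
def sse_put_args(mode, kms_key_id=None, customer_key=None):
    """Build server-side encryption arguments for an S3 upload request."""
    if mode == "SSE-S3":
        # S3 manages the keys itself.
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        args = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id:
            # Optional: name a specific KMS key instead of the account default.
            args["SSEKMSKeyId"] = kms_key_id
        return args
    if mode == "SSE-C":
        # You supply the key with every request; S3 does not keep it.
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 32-byte (256-bit) key")
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown encryption mode: {mode}")


# Hypothetical usage (bucket and key names are placeholders, not run here):
#   import boto3
#   boto3.client("s3").put_object(
#       Bucket="example-bucket", Key="contracts/acme.pdf", Body=b"...",
#       **sse_put_args("SSE-S3"),
#   )
```

With SSE-C, the same key has to be supplied again on every retrieval, since AWS does not store it for you.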

Client-Side Encryption

It’s also possible to encrypt data before it’s transferred to S3. This can be done using an AWS KMS–Managed Customer Master Key (CMK), which produces a unique key for each object before it’s uploaded. You can also use a Client-Side Master Key, which you provide through the Amazon S3 encryption client. Server-side encryption can greatly reduce the complexity of the process and is often preferred. Nevertheless, in some cases, your company (or regulatory oversight body) might require that you maintain full control over your encryption keys, leaving client-side as the only option.

Logging

Tracking S3 events to log files is disabled by default—S3 buckets can see a lot of activity, and not every use case justifies the log data that S3 can generate. When you enable logging, you’ll need to specify both a source bucket (the bucket whose activity you’re tracking) and a target bucket (the bucket to which you’d like the logs saved). Optionally, you can also specify delimiters and prefixes to make it easier to identify and organize logs from multiple source buckets that are saved to a single target bucket. S3-generated logs, which sometimes only appear after a short delay, will contain basic operation details, including the following:

– The account and IP address of the requestor

– The source bucket name

– The action that was requested (GET, PUT, etc.)

– The time the request was issued

– The response status (including error code)

S3 buckets are also used by other AWS services, including CloudWatch and CloudTrail, to store their logs or other objects (like EBS Snapshots).
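Returning to the source and target buckets described earlier in this section, here is a minimal sketch of the configuration you might hand to boto3’s `put_bucket_logging` call. The helper name `logging_status`, the bucket names, and the `logs/` base prefix are all placeholders; giving each source bucket its own prefix is one way to keep logs from several sources apart in a single target bucket.

```python
def logging_status(target_bucket, source_bucket, base_prefix="logs/"):
    """Build a BucketLoggingStatus dict that files logs under a per-source prefix."""
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            # e.g. "logs/app-data/" so each source bucket gets its own "folder"
            "TargetPrefix": f"{base_prefix}{source_bucket}/",
        }
    }


status = logging_status(target_bucket="central-logs", source_bucket="app-data")

# Hypothetical usage (not run here; requires credentials and existing buckets):
#   import boto3
#   boto3.client("s3").put_bucket_logging(
#       Bucket="app-data", BucketLoggingStatus=status
#   )
```

The target bucket also has to permit S3 log delivery before any logs arrive, which this sketch does not set up.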

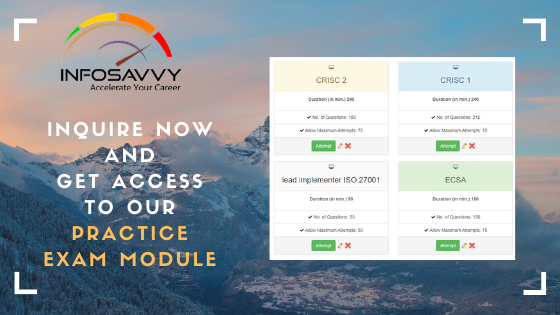

This blog article is posted by Infosavvy (www.info-savvy.com).