Capability Maturity Model (CMM)

The Capability Maturity Model (CMM) is a framework of guidelines for refining and maturing an organization’s software development process. It is based on an earlier U.S. Department of Defense process maturity framework that was built to assess the process capability and maturity of software contractors for the purpose of awarding contracts. The Software Engineering Institute (SEI) formalized this process maturity framework into the CMM. As the CMM was used over time, the industry’s needs outgrew the original CMM’s definition, and the CMM evolved into the Capability Maturity Model Integration (CMMI) framework.

Capability Maturity Model Integration (CMMI)

CMMI is a process-level improvement program created to integrate assessment and process improvement guidelines for separate organizational functions. The CMMI Institute, a subsidiary of ISACA (https://cmmiinstitute.com/cmmi), sets process improvement goals and priorities and provides a point of reference for appraising current processes. The CMMI framework is oriented toward what processes should be implemented rather than how they should be implemented. It describes the procedures, principles, and practices that are part of software development process maturity. The CMMI addresses the different phases of the SDLC, including requirements analysis, design, development, integration, installation, operations, and maintenance.

The CMMI framework addresses these areas of interest:

- Product and service development: CMMI for Development (CMMI-DEV) concentrates on product development and engineering.

- Service establishment and management: CMMI for Services (CMMI-SVC) covers the best practices and key capabilities of service management.

- Product and service acquisition: CMMI for Acquisition (CMMI-ACQ) focuses on responsible acquisition practice.

- People management: People Capability Maturity Model (PCMM) has key capabilities and best practices to improve people management.

These areas of interest are capability maturity models in their own right. The CMMI integrates them under a common framework. Each specialized model consists of the core process areas defined in the CMMI framework.

The CMMI provides a detailed elaboration of what is meant by maturity at each level of the CMM. It is a guide that can be used for software process improvement, software process assessments, and software capability evaluations.

Representation

The CMMI has two approaches to process improvement, called representations: continuous and staged. Continuous representation evaluates “capability levels.” Staged representation evaluates “maturity levels.” Both capability levels and maturity levels provide a way to improve the processes of an organization and measure how well organizations can and do improve their processes.

Which representation an organization selects, and whether it emphasizes capability or maturity, depends on its development or improvement goals. The same applies to the organization’s security goals, as explored in the following “Continuous” and “Staged” sections.

Continuous

The CMMI continuous representation focuses on specific process areas. It uses the term capability levels to describe an organization’s process improvement achievement for individual process areas. Continuous representation suits an organization that wants to improve specific processes, which it can choose based on its business objectives and risk mitigation needs.

Continuous representation can be used to focus on evaluating or improving an organization’s security processes within their overall SDLC processes. Furthermore, a continuous representation can home in on improving specific security capabilities within the security processes, such as the development and usage of security architecture patterns in the secure SDLC.

Staged

CMMI staged representation provides a means to measure the maturity level of an organization. A staged representation is a predefined road map for organizational improvement, which then translates into maturity. It provides a sequence of process improvements, with each serving as a foundation for the next. Maturity levels describe this progress along the improvement road map. A maturity level is a definite evolutionary step toward achieving improved organizational processes. CMMI staged representation allows comparisons within and between organizations by the use of maturity levels. It can serve as a basis for comparing the maturity of different projects or organizations.

In this way, a staged representation can be used to measure the maturity of an organization’s information security management system against competitors or industry reference points. In a similar fashion, it may also be used to compare the secure SDLC against a reference to measure its maturity.

Levels

Levels in the CMMI describe the degrees of progress along the evolutionary paths of capability or maturity. The levels used in the continuous representation, which applies to individual process areas, are capability levels. The levels used in the staged representation, which applies across multiple process areas, are maturity levels.

Capability Levels

The capability levels for continuous representation are Incomplete, Performed, Managed, and Defined. These levels represent an organization’s process improvement achievement in an individual process area.

- Incomplete, also known as capability level 0, indicates a process that is either not performed or partially performed.

- Performed, also known as capability level 1, represents a process that is performed and that accomplishes its specific goals.

- Managed, also known as capability level 2, represents a performed process that accomplishes its specific goals and that has been instituted by the organization to ensure that the process maintains its performance levels. A managed process is planned and executed according to policy. Processes at this level are monitored, controlled, reviewed, and evaluated for adherence to their process descriptions. These disciplines help to maintain process effectiveness during times of stress.

- Defined, also known as capability level 3, is a more rigorously defined managed process. Level 3 processes are more consistent than those at the managed level because they are based on the organization’s set of standard processes and are tailored to suit a particular project or organizational unit.

Maturity Levels

Maturity levels describe an organization’s process improvement achievements across multiple process areas. The maturity levels for the staged representation are Initial, Managed, Defined, Quantitatively Managed, and Optimizing.

- Initial, also known as maturity level 1, describes an organization whose processes are ad hoc or chaotic. Despite this, work gets done, but the work is likely over budget and behind schedule.

- Managed, or maturity level 2, indicates that processes at this level are planned and executed according to organizational policy, and the management has visibility on work products at defined points.

- Defined, or maturity level 3, relates to processes that are described in standards, procedures, and methods. Maturity level 3 processes are rigorously defined.

- Quantitatively managed, or maturity level 4, is when an organization uses quantitative objectives for quality and process performance as project management criteria. Statistical techniques are employed to improve the predictability of process performance at level 4. Formal measurements are key at this level.

- Optimizing, or maturity level 5, collects data from multiple projects to improve organizational performance as a whole. Level 5 emphasizes continuous improvements through quantitative and statistical methods as well as by responding to changing business needs and technology.
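The two kinds of levels can be made concrete with a small sketch. The following Python fragment is only an illustration, not part of the CMMI itself: the process area names, the sample ratings, and the helper `areas_below` are hypothetical. It models capability levels as ratings of individual process areas and maturity levels as a single organization-wide rating.

```python
from enum import IntEnum


class CapabilityLevel(IntEnum):
    """Capability levels of the continuous representation (per process area)."""
    INCOMPLETE = 0
    PERFORMED = 1
    MANAGED = 2
    DEFINED = 3


class MaturityLevel(IntEnum):
    """Maturity levels of the staged representation (across process areas)."""
    INITIAL = 1
    MANAGED = 2
    DEFINED = 3
    QUANTITATIVELY_MANAGED = 4
    OPTIMIZING = 5


def areas_below(profile, target):
    """Return the process areas whose capability level is below the target."""
    return sorted(area for area, level in profile.items() if level < target)


# Hypothetical capability profile: one rating per process area.
profile = {
    "requirements analysis": CapabilityLevel.DEFINED,
    "security architecture": CapabilityLevel.PERFORMED,
    "integration": CapabilityLevel.MANAGED,
}

# Continuous view: select the individual process areas to improve next.
print(areas_below(profile, CapabilityLevel.MANAGED))

# Staged view: a single rating allows comparison between organizations.
org_a, org_b = MaturityLevel.DEFINED, MaturityLevel.MANAGED
print(org_a > org_b)
```

Using ordered enumerations mirrors the idea that each level builds on the one before it, so levels can be compared directly.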

Building Security in Maturity Model (BSIMM)

The Building Security in Maturity Model (BSIMM) is a descriptive, software security-focused maturity model based on actual software security initiatives. It is available under the Creative Commons license. It documents what organizations have actually done, not what security experts would prescribe should be done. The BSIMM is built from hundreds of assessments of real-world security programs. It is regularly updated, and each change to the BSIMM reflects an observed change in the practices of real software security initiatives.

Using a model that describes real software security experiences with data collected from a variety of organizations is powerful. As an evidence-based model, it describes what is being done in industry and what works for organizations in their software security programs. The BSIMM is a source of empirically based guidance and ideas that an organization can use to meet its needs.

Software Assurance Maturity Model (SAMM)

The Software Assurance Maturity Model (SAMM) is a framework to help organizations formulate and implement a software security strategy that is tailored to the specific risks facing the organization. Unlike the BSIMM, the SAMM is a prescriptive framework. OWASP created and maintains the SAMM.

The SAMM can be used to:

- Evaluate an organization’s existing software security practices

- Build a balanced software security assurance program in well-defined iterations

- Demonstrate concrete improvements to a security assurance program

- Define and measure security-related activities throughout an organization

It provides a framework for creating and growing a software security initiative. For a software security program to be successful, security has to be built in; it cannot be bolted on after the fact.

SAMM Business Functions and Objectives

The structure of the SAMM is based on four business functions. These functions are governance, construction, verification, and operations. For each of these business functions, the SAMM defines three security practices. For each security practice, SAMM defines three maturity levels as objectives.

Governance

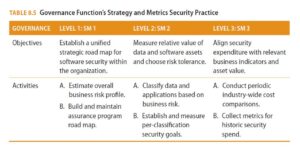

The governance function describes how an organization manages its software development activities. The three security practices of the governance function are strategy and metrics, policy and compliance, and education and guidance. Strategy and metrics direct and measure an organization’s security strategy. Policy and compliance involve setting up an organizational security, compliance, and audit control framework. Education and guidance deliver software development security training.

Construction

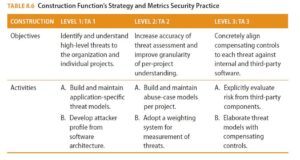

The construction function focuses on the activities and processes of creating software. The three security practices of the construction function are threat assessment, security requirements, and secure architecture. Threat assessment is focused on identifying and characterizing potential attacks against an organization’s software. Information gained from threat assessments is used to improve risk management. Security requirements involve adding security requirements into the software development process. The secure architecture practice is based on promoting secure by default designs and maintaining the security qualities in controlled use of technologies and frameworks.

Verification

The verification function centers on the activities and processes that an organization uses to test and evaluate software to verify its quality, functionality, safety, and security. The verification function’s three security practices are design review, implementation review, and security testing. The design review practice focuses on design artifact inspection to ensure built-in security supports and conforms to the organization’s security policies, standards, and expectations. Implementation review concerns assessment of source code to discover vulnerabilities and associated mitigation activities. Security testing examines running software for vulnerabilities and other security issues and sets security standards for software releases.

Operations

The operations function consists of the processes and activities that an organization uses to manage software releases. The three security practices of the operations function are issue management, environment hardening, and operational enablement. Issue management involves managing both internal and external issues to support the security assurance program and minimize exposures. The environment hardening practice relates to enhancing the security posture of deployed software by implementing controls on its operating environment. The operational enablement practice involves securely and correctly configuring, deploying, and running an organization’s software.
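The four business functions and their twelve security practices can be summarized as a simple lookup structure. The following Python sketch is an illustration only: the names `SAMM_PRACTICES` and `function_of` are hypothetical and do not come from the SAMM, while the functions and practices are those described above.

```python
# Business functions mapped to their three security practices,
# as named in the preceding descriptions.
SAMM_PRACTICES = {
    "governance": [
        "strategy and metrics",
        "policy and compliance",
        "education and guidance",
    ],
    "construction": [
        "threat assessment",
        "security requirements",
        "secure architecture",
    ],
    "verification": [
        "design review",
        "implementation review",
        "security testing",
    ],
    "operations": [
        "issue management",
        "environment hardening",
        "operational enablement",
    ],
}


def function_of(practice):
    """Return the business function that a security practice belongs to."""
    for function, practices in SAMM_PRACTICES.items():
        if practice in practices:
            return function
    raise KeyError(f"unknown security practice: {practice}")


print(function_of("threat assessment"))
print(sum(len(practices) for practices in SAMM_PRACTICES.values()))
```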

SAMM Maturity Levels

The SAMM has a maturity level of zero, which represents a starting point. Level 0 indicates that the practice it represents is incomplete.

The three SAMM maturity levels are as follows:

- Level 1: Initial understanding and ad hoc performance of security practice

- Level 2: Improved security practice efficiency and effectiveness

- Level 3: Security practice mastery at scale

Each maturity level for each security practice has objectives that the security practice must meet and activities that must be performed to achieve that level. Objectives and activities are specifically associated with the security practice and level. They represent the minimum state and conditions that a security practice must present to be at that level of maturity.
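One way to picture how these levels are used is a scorecard that records a current level and a target level for each security practice. The Python sketch below assumes such a scorecard; the function `gaps`, the sample ratings, and the rule that an unassessed practice counts as level 0 are illustrative assumptions, not part of the SAMM.

```python
# Maturity levels 0-3, paraphrasing the descriptions in the text.
SAMM_LEVELS = {
    0: "practice is incomplete (starting point)",
    1: "initial understanding and ad hoc performance of security practice",
    2: "improved security practice efficiency and effectiveness",
    3: "security practice mastery at scale",
}


def gaps(current, target):
    """Return {practice: levels still to climb} for practices below target."""
    result = {}
    for practice, goal in target.items():
        # Assumption: a practice that has not been assessed is at level 0.
        level = current.get(practice, 0)
        if level not in SAMM_LEVELS or goal not in SAMM_LEVELS:
            raise ValueError(f"SAMM levels run from 0 to 3: {practice}")
        if level < goal:
            result[practice] = goal - level
    return result


# Hypothetical assessment results and improvement targets.
current = {"strategy and metrics": 2, "threat assessment": 1}
target = {
    "strategy and metrics": 2,
    "threat assessment": 3,
    "security testing": 1,
}

print(gaps(current, target))
```

Because every level has its own objectives and activities, a gap of two levels means the objectives of both intermediate levels still have to be met, not only those of the target level.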

To illustrate the differences in objectives and activities among security practices and maturity levels, Tables 8.5 and 8.6 compare the governance function’s strategy and metrics security practice against the construction function’s threat assessment security practice.

The Software Assurance Maturity Model: A Guide to Building Security into Software Development, Version 1.5 provides the descriptions of objectives, activities, and the like that are shown in Tables 8.5 and 8.6.

These two tables are examples of how two different business functions’ security practices have the same three maturity levels but have different objectives and activities.

For more information on the SAMM, see The Software Assurance Maturity Model: A Guide to Building Security into Software Development, Version 1.5 (https://www.owasp.org/images/6/6f/SAMM_Core_V1-5_FINAL.pdf).

Maturity Model Summary

Maturity models are useful in determining the level of competency and effectiveness of an organization in its practices. Maturity models are particularly well suited for understanding an organization’s software development capability and maturity. Maturity models apply well to an organization’s security practices and, as you’ve seen from the BSIMM and SAMM earlier, particularly to how security is applied to software development practices.

Maturity models are assessment tools, however. Like most assessment tools, they provide a point-in-time understanding of the environment they assess. Software development security extends well beyond static snapshots. The real test of software development security comes when the software is running, during its operations and maintenance.